Disclaimer

This notebook won't work in Colab, due to an incompatibility with the fiftyone library.

Using Fiftyone in IceVision

Install IceVision

The following downloads and runs a short shell script. The script installs IceVision, IceData, the MMDetection library, and Yolo v5 as well as the fastai and pytorch lightning engines.

Install from pypi...

# Torch - Torchvision - IceVision - IceData - MMDetection - YOLOv5 - EfficientDet Installation

!wget https://raw.githubusercontent.com/airctic/icevision/master/icevision_install.sh

# Choose your installation target: cuda11 or cuda10 or cpu

!bash icevision_install.sh cuda11

... or from icevision master

# # Torch - Torchvision - IceVision - IceData - MMDetection - YOLOv5 - EfficientDet Installation

# !wget https://raw.githubusercontent.com/airctic/icevision/master/icevision_install.sh

# # Choose your installation target: cuda11 or cuda10 or cpu

# !bash icevision_install.sh cuda11 master

fiftyone is not part of IceVision. We need to install it separately.

# Install fiftyone

%pip install fiftyone -U

# Restart kernel after installation

import IPython

IPython.Application.instance().kernel.do_shutdown(True)

Imports

All of the IceVision components can be easily imported with a single line.

from icevision.all import *

from icevision.models import * # Needed for inference later

import icedata # Needed for sample data

import fiftyone as fo

Visualizing datasets

The fiftyone integration of IceVision can be used on either IceVision Datasets or IceVision Prediction. Let's start by visualizing a dataset.

If you don't know fiftyone yet, visit the website and read about the concepts: https://voxel51.com/docs/fiftyone.

Fiftyone Summary

Fiftyone is a tool to analyze datasets and detections of all forms.

For object detection these concepta are most relevant:

Fiftyone is structured into fo.Datasets. So every viewable entity is related to a fo.Dataset. An image is represented by a fo.Sample. After you created a fo.Sample you can add fo.Detections, which is constructed by a list of fo.Detection. Finally, you need to add a fo.Sample to your fo.Dataset and then launch your app by calling fo.launch_app(dataset). IceVision enables you to create all of these fo objects from IceVision classes.

Before we train or execute inference, we need to create an icevision.Dataset. From this dataset, we can create a fo.Dataset.

Since fiftyone operates on filepaths, you dataset needs filepath as component and you cannot use Dataset.from_images as it stores the images in RAM.

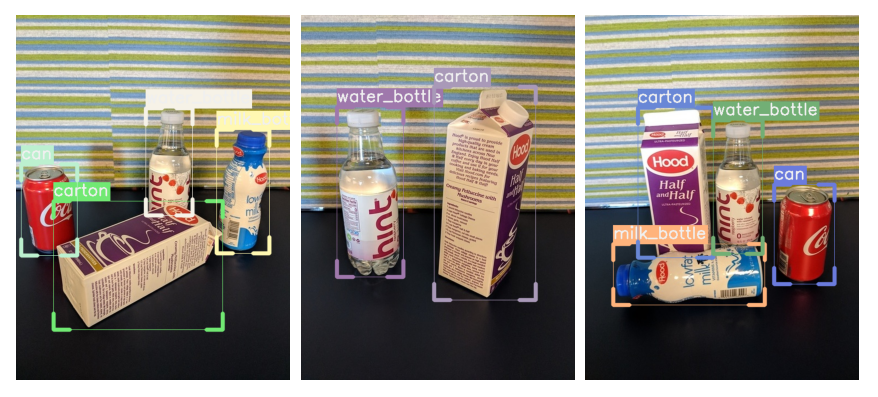

We use the fridge object dataset available from IceData.

# List all available fiftyone datasets on your machine:

fo.list_datasets()

# Download dataset

infer_ds_path = icedata.fridge.load_data()

train_records, valid_records = icedata.fridge.parser(infer_ds_path).parse()

# Set fo dataset name

fo_dataset_name = "inference_dataset"

#RandomSplitter Create fiftyone dataset

fo_dataset = data.create_fo_dataset(valid_records, fo_dataset_name)

# See your new dataset in the lists

fo.list_datasets()

fo.launch_app(fo_dataset)

[]

['inference_dataset']

Dataset: inference_dataset

Media type: image

Num samples: 26

Selected samples: 0

Selected labels: 0

Session URL: http://localhost:5151/

Visualizing predictions

Besides Datasets, you can also visualize predictions by calling the icevision.data.create_fo_dataset with an icevision.Prediction. The icevision.data.create_fo_dataset function allows you to append prediction to an existing dataset by setting the exist_ok argument to True.

When you display images in fiftyone it always refers to the original image. However, Icevision keeps its data already transformed, which means that the bounding box needs to be post-processed to match the original image size. You have two options for this post-processing:

- Either use IceVisions internal function by using the

transformationargument in theicevision.data.create_fo_datasetfunction - Or u

se your custom post-process function by using the

undo_bbox_tfms_fnargument

Loading the fridge model

checkpoint_path = 'https://github.com/airctic/model_zoo/releases/download/m6/fridge-retinanet-checkpoint-full.pth'

checkpoint_and_model = model_from_checkpoint(checkpoint_path)

# Just logging the info

model_type = checkpoint_and_model["model_type"]

backbone = checkpoint_and_model["backbone"]

class_map = checkpoint_and_model["class_map"]

img_size = checkpoint_and_model["img_size"]

model_type, backbone, class_map, img_size

# Inference

# Model

model = checkpoint_and_model["model"]

# Transforms

img_size = checkpoint_and_model["img_size"]

valid_tfms = tfms.A.Adapter([*tfms.A.resize_and_pad(img_size), tfms.A.Normalize()])

# Create dataset

infer_ds = Dataset(valid_records, valid_tfms)

# Batch Inference

infer_dl = model_type.infer_dl(infer_ds, batch_size=4, shuffle=False)

preds = model_type.predict_from_dl(model, infer_dl, keep_images=True)

# Lets add the predictions to our prevoius dataset.

fo_dataset = data.create_fo_dataset(detections=preds,

dataset_name=fo_dataset_name,

exist_ok=True,

transformations=valid_tfms.tfms_list) # Use IceVisions automatic postprocess bbox function by adding the tfms_list

# List datasets, to see that no new is created

fo.list_datasets()

fo.launch_app(fo_dataset)

['inference_dataset']

Dataset: inference_dataset

Media type: image

Num samples: 52

Selected samples: 0

Selected labels: 0

Session URL: http://localhost:5151/

Merging samples

You can see that images are not automatically matched by filepaths. Therefore use fo.Dataset.merge_samples.

Create your own fo.Dataset with fo.Sample

This is handy if you need more control over individual samples. For example, you want to compare several training runs, you can create your own fo.Sample and add records manually to avoid merging samples afterward.

We provide 2 functions to create fo.Samples:

data.convert_prediction_to_fo_sampledata.convert_record_to_fo_sample

# Create custom dataset

custom_fo_dataset_name = "custom_fo_dataset"

custom_dataset = fo.Dataset(custom_fo_dataset_name)

# Load dataset

infer_ds_path = icedata.fridge.load_data()

train_records, valid_records = icedata.fridge.parser(infer_ds_path).parse()

sample_list = []

# Iter over dataset and create samples based on records

# The field_name refers to the fields of fiftyone, which is used to structure samples in fiftyone

for record in train_records[:1]:

sample = fo.Sample(record.common.filepath) # Create sample and use it in the function below

sample_list.append(data.convert_record_to_fo_sample(record=record,

field_name="train_set",

sample=sample)

)

# Prediction have their own convert function

for pred in preds[:1]:

sample_list.append(data.convert_prediction_to_fo_sample(prediction=pred, transformations=valid_tfms.tfms_list))

# Print our samples

print(sample_list)

# Add our samples to the dataset

custom_dataset.add_samples(samples=sample_list)

fo.launch_app(custom_dataset)

0%| | 0/128 [00:00<?, ?it/s]

[1m[1mINFO [0m[1m[0m - [1m[34m[1mAutofixing records[0m[1m[34m[0m[1m[0m | [36micevision.parsers.parser[0m:[36mparse[0m:[36m122[0m

0%| | 0/128 [00:00<?, ?it/s]

[<Sample: {

'id': None,

'media_type': 'image',

'filepath': '/home/laurenz/.icevision/data/fridge/odFridgeObjects/images/94.jpg',

'tags': [],

'metadata': None,

'train_set': <Detections: {

'detections': BaseList([

<Detection: {

'id': '61efa9f66ae4a8a68dd47c92',

'attributes': BaseDict({}),

'tags': BaseList([]),

'label': 'carton',

'bounding_box': BaseList([

0.036072144288577156,

0.3033033033033033,

0.3226452905811623,

0.506006006006006,

]),

'mask': None,

'confidence': None,

'index': None,

}>,

<Detection: {

'id': '61efa9f66ae4a8a68dd47c93',

'attributes': BaseDict({}),

'tags': BaseList([]),

'label': 'water_bottle',

'bounding_box': BaseList([

0.32665330661322645,

0.3633633633633634,

0.21442885771543085,

0.42492492492492495,

]),

'mask': None,

'confidence': None,

'index': None,

}>,

<Detection: {

'id': '61efa9f66ae4a8a68dd47c94',

'attributes': BaseDict({}),

'tags': BaseList([]),

'label': 'can',

'bounding_box': BaseList([

0.5210420841683366,

0.503003003003003,

0.20641282565130262,

0.2747747747747748,

]),

'mask': None,

'confidence': None,

'index': None,

}>,

<Detection: {

'id': '61efa9f66ae4a8a68dd47c95',

'attributes': BaseDict({}),

'tags': BaseList([]),

'label': 'milk_bottle',

'bounding_box': BaseList([

0.7034068136272545,

0.3963963963963964,

0.20240480961923848,

0.3768768768768769,

]),

'mask': None,

'confidence': None,

'index': None,

}>,

]),

}>,

}>, <Sample: {

'id': None,

'media_type': 'image',

'filepath': '/home/laurenz/.icevision/data/fridge/odFridgeObjects/images/76.jpg',

'tags': [],

'metadata': None,

'ground_truth': <Detections: {

'detections': BaseList([

<Detection: {

'id': '61efa9f66ae4a8a68dd47c96',

'attributes': BaseDict({}),

'tags': BaseList([]),

'label': 'milk_bottle',

'bounding_box': BaseList([

0.06212424849699399,

0.36936936936936937,

0.24248496993987975,

0.3978978978978979,

]),

'mask': None,

'confidence': None,

'index': None,

}>,

<Detection: {

'id': '61efa9f66ae4a8a68dd47c97',

'attributes': BaseDict({}),

'tags': BaseList([]),

'label': 'carton',

'bounding_box': BaseList([

0.218436873747495,

0.23423423423423423,

0.2565130260521042,

0.4519519519519519,

]),

'mask': None,

'confidence': None,

'index': None,

}>,

<Detection: {

'id': '61efa9f66ae4a8a68dd47c98',

'attributes': BaseDict({}),

'tags': BaseList([]),

'label': 'water_bottle',

'bounding_box': BaseList([

0.11022044088176353,

0.5870870870870871,

0.593186372745491,

0.3078078078078078,

]),

'mask': None,

'confidence': None,

'index': None,

}>,

<Detection: {

'id': '61efa9f66ae4a8a68dd47c99',

'attributes': BaseDict({}),

'tags': BaseList([]),

'label': 'can',

'bounding_box': BaseList([

0.6933867735470942,

0.44744744744744747,

0.2124248496993988,

0.2627627627627628,

]),

'mask': None,

'confidence': None,

'index': None,

}>,

]),

}>,

'prediction': <Detections: {

'detections': BaseList([

<Detection: {

'id': '61efa9f66ae4a8a68dd47c9a',

'attributes': BaseDict({}),

'tags': BaseList([]),

'label': 'milk_bottle',

'bounding_box': BaseList([

0.21442885771543085,

0.23123123123123124,

0.26452905811623245,

0.46396396396396394,

]),

'mask': None,

'confidence': 0.9985953,

'index': None,

}>,

<Detection: {

'id': '61efa9f66ae4a8a68dd47c9b',

'attributes': BaseDict({}),

'tags': BaseList([]),

'label': 'carton',

'bounding_box': BaseList([

0.06212424849699399,

0.36936936936936937,

0.24248496993987975,

0.4009009009009009,

]),

'mask': None,

'confidence': 0.99891174,

'index': None,

}>,

<Detection: {

'id': '61efa9f66ae4a8a68dd47c9c',

'attributes': BaseDict({}),

'tags': BaseList([]),

'label': 'can',

'bounding_box': BaseList([

0.6973947895791583,

0.44744744744744747,

0.20440881763527055,

0.2627627627627628,

]),

'mask': None,

'confidence': 0.9997763,

'index': None,

}>,

<Detection: {

'id': '61efa9f66ae4a8a68dd47c9d',

'attributes': BaseDict({}),

'tags': BaseList([]),

'label': 'water_bottle',

'bounding_box': BaseList([

0.11022044088176353,

0.5855855855855856,

0.593186372745491,

0.3153153153153153,

]),

'mask': None,

'confidence': 0.9862601,

'index': None,

}>,

]),

}>,

}>]

['61efa9f66ae4a8a68dd47c9e', '61efa9f66ae4a8a68dd47ca3']

Dataset: custom_fo_dataset

Media type: image

Num samples: 2

Selected samples: 0

Selected labels: 0

Session URL: http://localhost:5151/

Cleanup

fo.delete_dataset(fo_dataset_name)

fo.delete_dataset(custom_fo_dataset_name)

Happy Learning!

If you need any assistance, feel free to join our forum.