IceVision meets W&B

IceVision + W&B = Agnostic Object Detection Framework with Outstanding Experiments Tracking

IceVision fully supports W&B by providing a one-liner API that enables users to track their trained models and display both the predicted and ground truth bounding boxes.

W&B makes visualizing and tracking different models performance a highly enjoyable task. Indeed, we are able to monitor the performance of several EfficientDet backbones by changing few lines of code and obtaining very intuitive and easy-to-interpret figures that highlights both the similarities and differences between the different backbones.

For more information check the Report. Note, however, that the report refers to an older version of IceVision. This tutorial is updated for IceVision 0.7.

This tutorial emphasizes the additional work required to integrated W&B. If you are new to IceVision, then we suggest that you look at the getting started with object detection tutorial.

In this tutorial, we are using the fastai library training loop, the efficientdet object detection model, and a sample dataset with images of objects that you might find in a fridge. Following the usual practice with IceVision, you can use W&B with other training loops, model libraries, models and backbones. The W&B specific lines below would not need to be changed.

Install IceVision and IceData

If on Colab run the following cell, else check the installation instructions

Install from pypi...

# # Torch - Torchvision - IceVision - IceData - MMDetection - YOLOv5 - EfficientDet Installation

# !wget https://raw.githubusercontent.com/airctic/icevision/master/icevision_install.sh

# # Choose your installation target: cuda11 or cuda10 or cpu

# !bash icevision_install.sh cuda11

... or from icevision master

# Torch - Torchvision - IceVision - IceData - MMDetection - YOLOv5 - EfficientDet Installation

!wget https://raw.githubusercontent.com/airctic/icevision/master/icevision_install.sh

# Choose your installation target: cuda11 or cuda10 or cpu

!bash icevision_install.sh cuda11 master

# Restart kernel after installation

import IPython

IPython.Application.instance().kernel.do_shutdown(True)

{'restart': True, 'status': 'ok'}

Imports

from icevision.all import *

from fastai.callback.wandb import *

from fastai.callback.tracker import SaveModelCallback

[1m[1mINFO [0m[1m[0m - [1mDownloading default `.ttf` font file - SpaceGrotesk-Medium.ttf from https://raw.githubusercontent.com/airctic/storage/master/SpaceGrotesk-Medium.ttf to /root/.icevision/fonts/SpaceGrotesk-Medium.ttf[0m | [36micevision.visualize.utils[0m:[36mget_default_font[0m:[36m70[0m

[1m[1mINFO [0m[1m[0m - [1mDownloading mmdet configs[0m | [36micevision.models.mmdet.download_configs[0m:[36mdownload_mmdet_configs[0m:[36m31[0m

0B [00:00, ?B/s]

Downloading https://ultralytics.com/assets/Arial.ttf to /root/.config/Ultralytics/Arial.ttf...

Load the Fridge Objects dataset

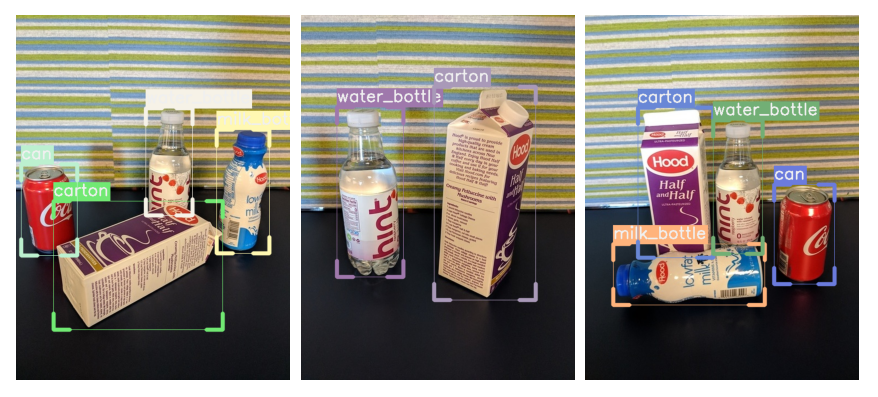

The fridge Objects dataset is tiny dataset that contains 134 images of 4 classes: - can, - carton, - milk bottle, - water bottle.

IceVision provides very handy methods such as loading a dataset, parsing annotations, and more.

# Dataset

url = "https://cvbp-secondary.z19.web.core.windows.net/datasets/object_detection/odFridgeObjects.zip"

dest_dir = "fridge"

data_dir = icedata.load_data(url, dest_dir, force_download=True)

# Create the parser

parser = parsers.VOCBBoxParser(annotations_dir=data_dir / "odFridgeObjects/annotations", images_dir=data_dir / "odFridgeObjects/images")

# Parse annotations to create records

train_records, valid_records = parser.parse()

parser.class_map

0%| | 0/20380998 [00:00<?, ?B/s]

0%| | 0/128 [00:00<?, ?it/s]

[1m[1mINFO [0m[1m[0m - [1m[34m[1mAutofixing records[0m[1m[34m[0m[1m[0m | [36micevision.parsers.parser[0m:[36mparse[0m:[36m122[0m

0%| | 0/128 [00:00<?, ?it/s]

<ClassMap: {'background': 0, 'carton': 1, 'milk_bottle': 2, 'can': 3, 'water_bottle': 4}>

Train and Validation Datasets

# Transforms

image_size = 384

train_tfms = tfms.A.Adapter([*tfms.A.aug_tfms(size=image_size, presize=512), tfms.A.Normalize()])

valid_tfms = tfms.A.Adapter([*tfms.A.resize_and_pad(image_size), tfms.A.Normalize()])

# Datasets

train_ds = Dataset(train_records, train_tfms)

valid_ds = Dataset(valid_records, valid_tfms)

Create the model

In IceVision, we need to select the model type and backbone. For this tutorial, we are selecting efficientdet and the tf_lite0 backbone. Some models require additional information, such as the image_size.

# Library and model selection

model_type = models.ross.efficientdet

backbone = model_type.backbones.tf_lite0(pretrained=True)

# The efficientdet model requires an img_size parameter

extra_args = {'img_size' : image_size}

model = model_type.model(backbone=backbone, num_classes=len(parser.class_map), **extra_args)

Downloading: "https://github.com/rwightman/efficientdet-pytorch/releases/download/v0.1/tf_efficientdet_lite0-f5f303a9.pth" to /root/.cache/torch/hub/checkpoints/tf_efficientdet_lite0-f5f303a9.pth

Create the dataloaders

The dataloaders differ somewhat across the model_types, so creating them comes after selecting the model type.

# DataLoaders

train_dl = model_type.train_dl(train_ds, batch_size=16, num_workers=4, shuffle=True)

valid_dl = model_type.valid_dl(valid_ds, batch_size=16, num_workers=4, shuffle=False)

/usr/local/lib/python3.7/dist-packages/torch/utils/data/dataloader.py:481: UserWarning: This DataLoader will create 4 worker processes in total. Our suggested max number of worker in current system is 2, which is smaller than what this DataLoader is going to create. Please be aware that excessive worker creation might get DataLoader running slow or even freeze, lower the worker number to avoid potential slowness/freeze if necessary.

cpuset_checked))

Training

Intialize W&B

At this point, we initialize W&B. This works in the jupyter notebook, but it is more typical run W&B from within a programme. This is partly because it enables you to track the progress of your training jobs from a custom dashboard from your browser, tablet, or phone. The full interface also makes it easy to compare multiple training runs, which can be very powerful when combined with IceVision. You can easily see which model is best suited to your problem.

Initializing is a single line from the W&B library.

wandb.init(project="icevision-fridge", name="efficientdet_tf_lite0", reinit=True)

Create the learner

This tutorial is usingfastai, but IceVision lets you us other frameworks such as pytorch-lightning.

In order to use W&B within fastai, you need to specify the WandbCallback, which results in logging the metrics as well as other key parameters, as well as the SaveModelCallback, which enables W&B to log the models. Logging the model is very powerful, as it ensures that you have a copy of the best version of the model as you train. If you are using W&B on-line, however, it causes your model to be transferred to the W&B database as well as saved in a local wandb directory.

learn = model_type.fastai.learner(dls=[train_dl, valid_dl], model=model,

metrics=[COCOMetric(metric_type=COCOMetricType.bbox)],

cbs=[WandbCallback(), SaveModelCallback()])

Train

In this case, we use the fit_one_cycle training method from fastai, which uses a specific policy for adjusting the learning rate. This model is likely to take around 2-10 seconds per epoch, depending on your hardware. Training for 30 epochs on this small dataset typically reaches a level around 0.8 (COCOMetric), which is sufficient for our demonstration purposes and saves some time.

learn.fit_one_cycle(30, 1e-2)

Could not gather input dimensions

WandbCallback was not able to prepare a DataLoader for logging prediction samples -> 'Dataset' object has no attribute 'items'

| epoch | train_loss | valid_loss | COCOMetric | time |

|---|---|---|---|---|

| 0 | 1.940700 | 1.267195 | 0.000069 | 00:12 |

| 1 | 1.685492 | 1.271025 | 0.000023 | 00:07 |

| 2 | 1.540221 | 1.264216 | 0.017094 | 00:07 |

| 3 | 1.424315 | 1.147959 | 0.155413 | 00:07 |

| 4 | 1.303290 | 1.082212 | 0.218895 | 00:07 |

| 5 | 1.184958 | 0.875454 | 0.310934 | 00:07 |

| 6 | 1.083239 | 0.831463 | 0.260008 | 00:07 |

| 7 | 0.993147 | 0.897370 | 0.278169 | 00:07 |

| 8 | 0.912498 | 0.779531 | 0.356216 | 00:07 |

| 9 | 0.844710 | 0.740413 | 0.360409 | 00:07 |

| 10 | 0.782819 | 0.723692 | 0.377731 | 00:07 |

| 11 | 0.729454 | 0.745544 | 0.323658 | 00:07 |

| 12 | 0.687574 | 0.653606 | 0.413525 | 00:07 |

| 13 | 0.651915 | 0.655265 | 0.417471 | 00:07 |

| 14 | 0.620716 | 0.651639 | 0.429272 | 00:07 |

| 15 | 0.589860 | 0.543764 | 0.468006 | 00:07 |

| 16 | 0.559970 | 0.516403 | 0.574289 | 00:07 |

| 17 | 0.531339 | 0.510701 | 0.534853 | 00:07 |

| 18 | 0.504182 | 0.490539 | 0.590217 | 00:07 |

| 19 | 0.482276 | 0.391926 | 0.669296 | 00:07 |

| 20 | 0.460442 | 0.391556 | 0.693068 | 00:07 |

| 21 | 0.444368 | 0.344799 | 0.738415 | 00:07 |

| 22 | 0.425590 | 0.352732 | 0.725862 | 00:07 |

| 23 | 0.409553 | 0.319861 | 0.769296 | 00:07 |

| 24 | 0.395036 | 0.308085 | 0.769391 | 00:07 |

| 25 | 0.383020 | 0.302642 | 0.775355 | 00:07 |

| 26 | 0.372328 | 0.293886 | 0.785769 | 00:07 |

| 27 | 0.362688 | 0.292389 | 0.796928 | 00:07 |

| 28 | 0.355098 | 0.289134 | 0.799669 | 00:07 |

| 29 | 0.347778 | 0.283973 | 0.806622 | 00:07 |

/usr/local/lib/python3.7/dist-packages/effdet/bench.py:45: UserWarning: __floordiv__ is deprecated, and its behavior will change in a future version of pytorch. It currently rounds toward 0 (like the 'trunc' function NOT 'floor'). This results in incorrect rounding for negative values. To keep the current behavior, use torch.div(a, b, rounding_mode='trunc'), or for actual floor division, use torch.div(a, b, rounding_mode='floor').

indices_all = cls_topk_indices_all // num_classes

Better model found at epoch 0 with valid_loss value: 1.2671946287155151.

Better model found at epoch 2 with valid_loss value: 1.2642161846160889.

Better model found at epoch 3 with valid_loss value: 1.1479589939117432.

Better model found at epoch 4 with valid_loss value: 1.0822120904922485.

Better model found at epoch 5 with valid_loss value: 0.8754537105560303.

Better model found at epoch 6 with valid_loss value: 0.8314631581306458.

Better model found at epoch 8 with valid_loss value: 0.7795311808586121.

Better model found at epoch 9 with valid_loss value: 0.7404130697250366.

Better model found at epoch 10 with valid_loss value: 0.7236919403076172.

Better model found at epoch 12 with valid_loss value: 0.6536057591438293.

Better model found at epoch 14 with valid_loss value: 0.6516388654708862.

Better model found at epoch 15 with valid_loss value: 0.5437638759613037.

Better model found at epoch 16 with valid_loss value: 0.5164031982421875.

Better model found at epoch 17 with valid_loss value: 0.5107009410858154.

Better model found at epoch 18 with valid_loss value: 0.4905391037464142.

Better model found at epoch 19 with valid_loss value: 0.3919256627559662.

Better model found at epoch 20 with valid_loss value: 0.3915559649467468.

Better model found at epoch 21 with valid_loss value: 0.34479889273643494.

Better model found at epoch 23 with valid_loss value: 0.3198612332344055.

Better model found at epoch 24 with valid_loss value: 0.3080853819847107.

Better model found at epoch 25 with valid_loss value: 0.302642285823822.

Better model found at epoch 26 with valid_loss value: 0.29388612508773804.

Better model found at epoch 27 with valid_loss value: 0.2923893630504608.

Better model found at epoch 28 with valid_loss value: 0.2891344130039215.

Better model found at epoch 29 with valid_loss value: 0.28397276997566223.

Show results

We can now look athe results of the training in the notebook.

model_type.show_results(model, valid_ds)

/usr/local/lib/python3.7/dist-packages/effdet/bench.py:45: UserWarning: __floordiv__ is deprecated, and its behavior will change in a future version of pytorch. It currently rounds toward 0 (like the 'trunc' function NOT 'floor'). This results in incorrect rounding for negative values. To keep the current behavior, use torch.div(a, b, rounding_mode='trunc'), or for actual floor division, use torch.div(a, b, rounding_mode='floor').

indices_all = cls_topk_indices_all // num_classes

Get predictions

Let's get the list of predictions from our model. We do this by creating an infer_dl - a dataloader used for inference and then getting predictions from the data loader.

Please note the keep_images=True. By default, the predictions include scores, labels, and bounding boxes. In our case, we want to keep the images so that we log them to W&B.

infer_dl = model_type.infer_dl(valid_ds, batch_size=8)

preds = model_type.predict_from_dl(model=model, infer_dl=infer_dl, keep_images=True)

0%| | 0/4 [00:00<?, ?it/s]

/usr/local/lib/python3.7/dist-packages/effdet/bench.py:45: UserWarning: __floordiv__ is deprecated, and its behavior will change in a future version of pytorch. It currently rounds toward 0 (like the 'trunc' function NOT 'floor'). This results in incorrect rounding for negative values. To keep the current behavior, use torch.div(a, b, rounding_mode='trunc'), or for actual floor division, use torch.div(a, b, rounding_mode='floor').

indices_all = cls_topk_indices_all // num_classes

Log results to W&B

Now comes the most important bit of this tutorial - actually logging the predictions to W&B. This takes one line specific to icevision and a second line to send the information to W&B.

# Create wandb_images for each prediction

wandb_images = wandb_img_preds(preds, add_ground_truth=True)

# Log the wandb_images to wandb

wandb.log({"Predicted images": wandb_images})

After logging and finishing the training, it is good to mark the run as completed. This can take a few seconds, as we wait for the W&B processes to transfer data and finalize logging.

# optional: mark the run as completed

wandb.join()

Happy Learning!

If you need any assistance, feel free to join our forum.